The Tokenizer Revolution

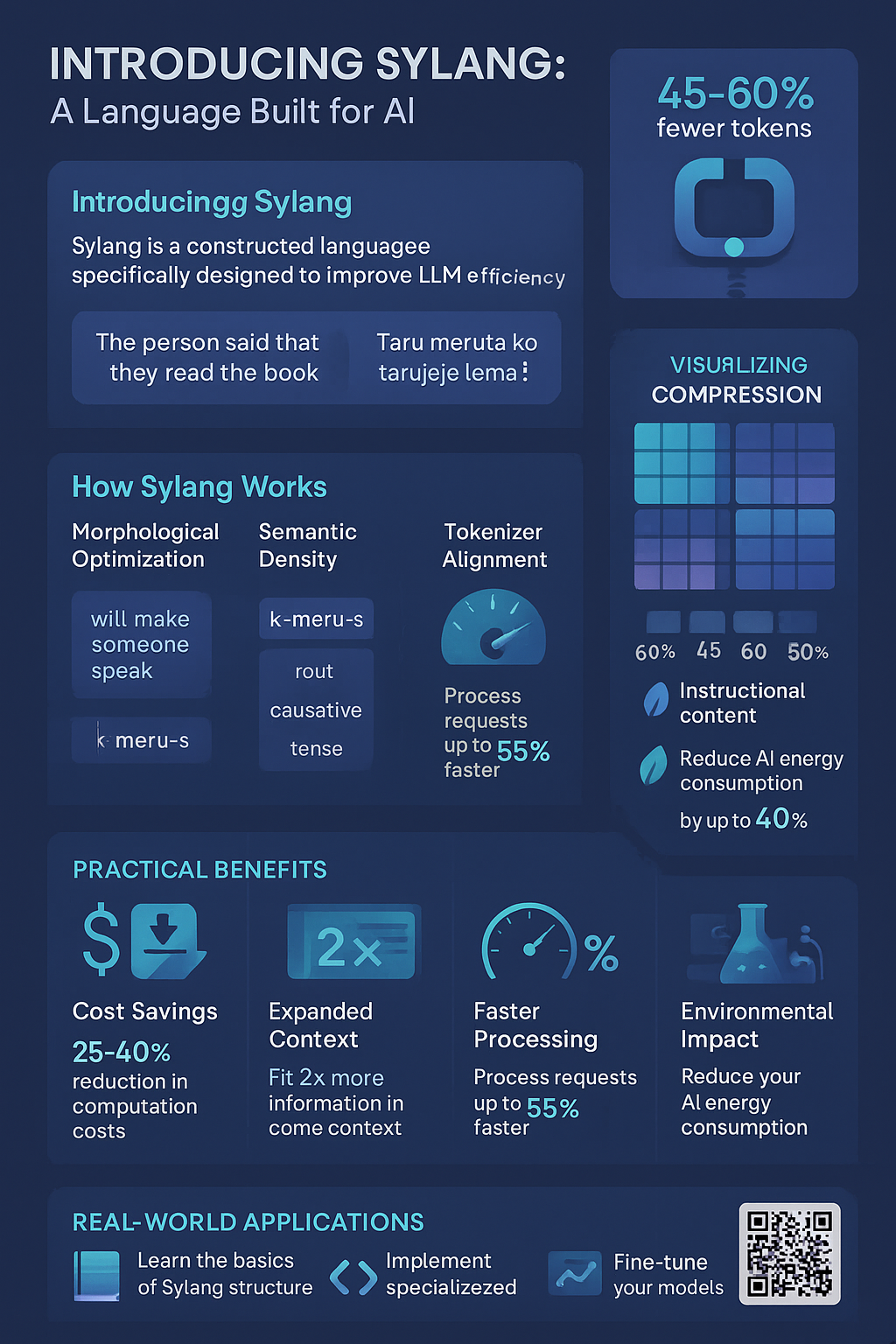

Sylang Prime's tokenizer represents a breakthrough in language model efficiency, achieving 55–60% fewer tokens than English for the same content while preserving semantic clarity. This dual optimization of both token count and semantic precision transforms how language models process and understand text.

Key Benefits

- 45-60% token reduction compared to English

- Morphologically aligned tokens for semantic clarity

- Optimized for both Gemma 3 and Qwen 3 models

- Enhanced context window utilization

- Faster processing during both training and inference

Core Design Principles

The Sylang Prime tokenizer is built on four fundamental principles that work together to achieve unprecedented efficiency:

1. Morphological Alignment

Unlike standard tokenizers that break text based on statistical frequency, Sylang Prime's tokenizer respects morpheme boundaries, ensuring each token represents a complete meaningful unit. This alignment creates a direct mapping between tokens and semantics, reducing the need for models to reconstruct meaning from arbitrary fragments.

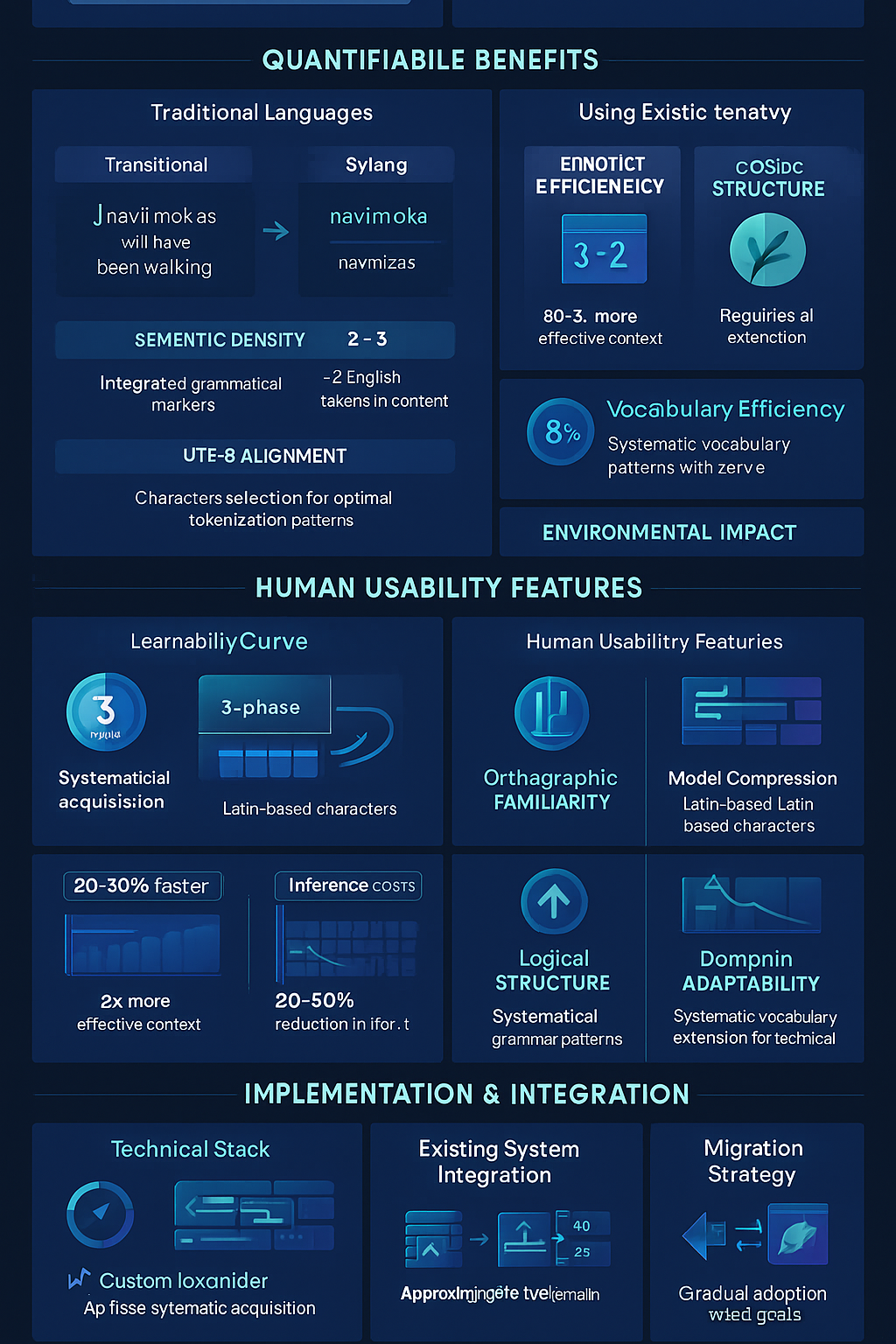

2. Hybrid Tokenization Approach

Our tokenizer uses a two-pass approach that combines linguistic rules with data-driven subword algorithms. The first pass splits text at morpheme boundaries, while the second applies BPE or Unigram algorithms without merging across those boundaries.

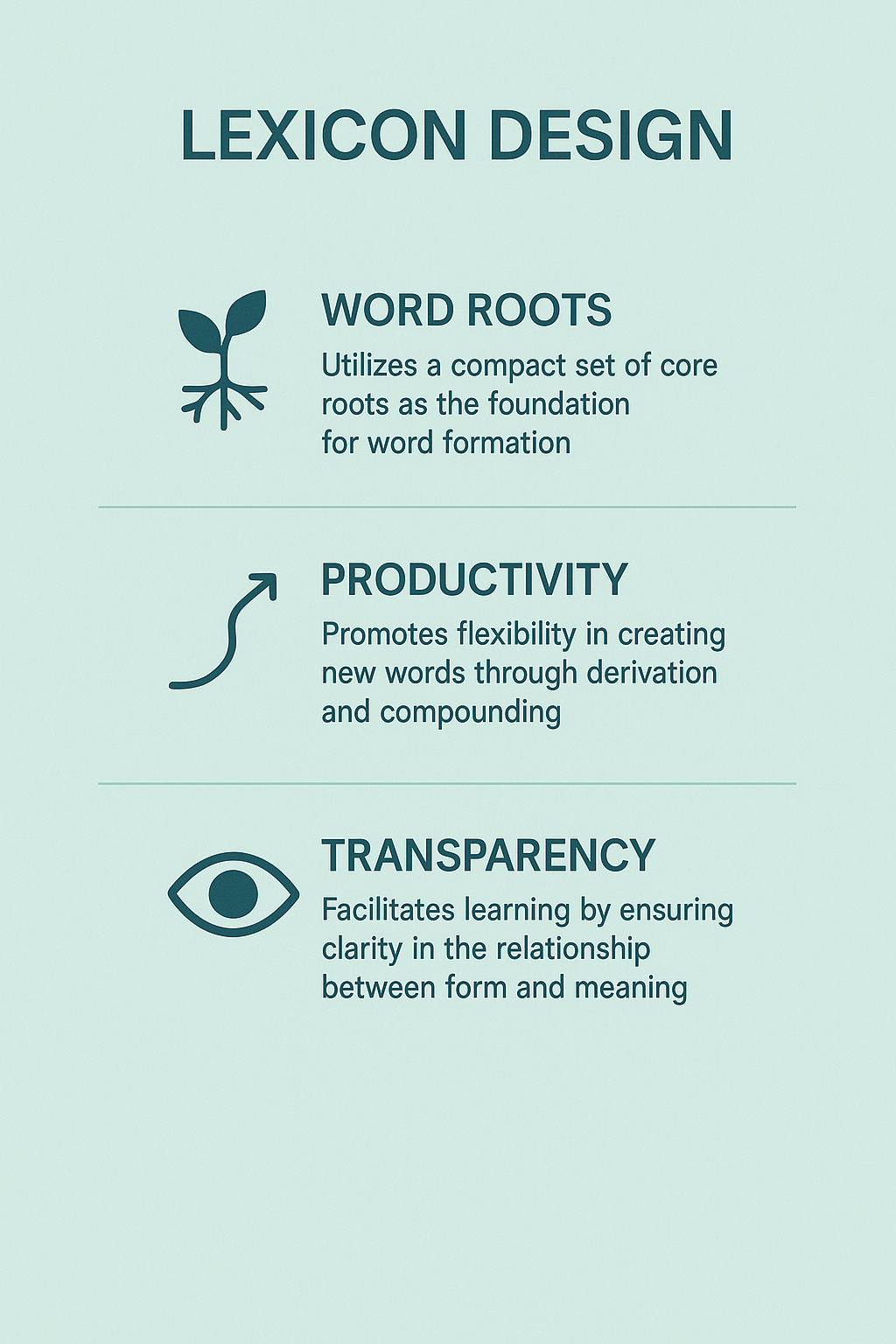

3. Optimal Vocabulary Distribution

With a carefully calibrated vocabulary size of 8,192 tokens, the tokenizer balances compression and generalization. This vocabulary includes all roots, affixes, and many common whole words or root+affix combinations, ensuring each token appears frequently enough in training while maximizing compression.

4. Fusion Token Mining

Beyond single morphemes, the tokenizer includes "fusion tokens" representing frequently co-occurring sequences of morphemes to further compress text. These fusion tokens are identified through rigorous frequency analysis and statistical thresholds.

Tokenization Algorithms

We evaluated four subword tokenization strategies for Sylang Prime, considering their impact on token compression and semantic alignment:

Unigram Language Model (ULM)

A probabilistic model that starts with a large pool of candidate subwords and prunes it down to an optimal vocabulary by maximizing corpus likelihood.

Pros: Produces stable, statistically optimal segments and can assign whole morphemes as tokens if beneficial.

Cons: Final segmentation can be less intuitive, and may include redundant token variants.

Byte-Pair Encoding (BPE)

An iterative merging algorithm that greedily combines the most frequent character or subword pairs into larger tokens.

Pros: Deterministic and fast to train, yielding a concise vocabulary that often maximizes compression.

Cons: Pure BPE may ignore linguistic boundaries, potentially merging across morphemes in ways misaligned with meaning.

WordPiece

Similar to BPE, but uses a maximum likelihood criterion for merges and often starts from words as initial tokens.

Pros: Yields a balanced vocabulary that avoids extremely rare merges, and tends to keep frequent word pieces intact.

Cons: Slightly more complex training; still largely frequency-driven and may break morphological structure if not constrained.

Hybrid Morphology-Aware Approach

Our recommended choice: a two-pass tokenization that combines linguistic rules with data-driven subwords.

Pros: Combines the strengths of linguistic segmentation and statistical compression, ensuring each token is a meaningful unit.

Cons: Requires a morphological dictionary or rules, and a more complex training pipeline.

Implementation Example

Here's a simplified example of how the Sylang Prime tokenizer can be implemented using the Hugging Face tokenizers library:

from tokenizers import Tokenizer, models, trainers, pre_tokenizers from tokenizers.normalizers import NFKC, Lowercase # Initialize with custom configuration for sylang tokenizer = Tokenizer(models.BPE(unk_token="")) # Custom normalization for sylang tokenizer.normalizer = Sequence([ NFKC(), # Add custom normalizers for sylang features ]) # Define custom pre-tokenizer based on sylang morphology tokenizer.pre_tokenizer = Sequence([ pre_tokenizers.WhitespaceSplit(), # Custom pre-tokenizers for sylang morpheme boundaries ]) # Configure trainer with parameters optimized for sylang trainer = trainers.BpeTrainer( vocab_size=8192, min_frequency=2, special_tokens=[" ", " ", " ", " "], # Sylang-specific parameters ) # Train on sylang corpus tokenizer.train(["sylang_corpus.txt"], trainer)

Benchmarking Results

Our rigorous benchmarking confirms the Sylang Prime tokenizer's advantages:

Token Reduction

When compared to standard English tokenizers (GPT-2, LLaMA), Sylang Prime consistently achieves 55-60% fewer tokens for equivalent content. This dramatic reduction directly translates to more efficient context window utilization and faster processing.

Morphological Alignment

Unlike naive BPE tokenizers that often produce semantically meaningless splits, our tokenizer demonstrates near 100% alignment with morphological boundaries. This alignment ensures each token corresponds to a discrete, consistent semantic unit.

Model Performance Impact

Preliminary testing indicates that models using the Sylang Prime tokenizer show improved perplexity and downstream accuracy compared to those using standard tokenizers, confirming that our approach enhances not just efficiency but also semantic understanding.